A few years ago, I was really bothered by an academic paper. Some researchers in France put together a comparison showing lots of ways you could implement realtime collaborative editing (like Google Docs). They implemented lots of algorithms - CRDTs and OT algorithms and stuff. And they benchmarked them all to see how they perform. (Cool!!)

Some algorithms worked reasonably well. But others took upwards of 3 seconds to process simple paste operations from their editing sessions. Yikes!

Which algorithm was that? Well, this is awkward but... it was mine. I mean, I didn't invent it - but it was the algorithm I was using for ShareJS. The algorithm we used for Google Wave. The algorithm which - hang on - I knew for a fact didn't take 3 seconds to process large paste events. What's going on here?

I took a closer look at the paper. In their implementation when a user pasted a big chunk of text (like 1000 characters), instead of creating 1 operation with 1000 characters, their code split the insert into 1000 individual operations. And each of those operations needed to be processed separately. D'oh - of course it'll be slow if you do that! This isn't a problem with the operational transformation algorithm. This is just a problem with their particular implementation.

The infuriating part was that several people sent me links to the paper and (pointedly) asked me what I thought about it. Written up as a Published Science Paper, these speed comparisons seemed like a Fact About The Universe. And not what they really were - implementation details of some Java code, written by a probably overstretched grad student. One of a whole bunch of implementations that they needed to code up.

"Nooo! The peer-reviewed science isn't right everybody! Please believe me!". But I didn't have a published paper justifying my claims. I had working code but it felt like none of the smart computer science people cared about that. Who was I? I was nobody.
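To make the paste example concrete, here is a minimal sketch of the difference between one big insert and the same insert split per character. The `Insert` type and the `apply` and `split_per_character` helpers are hypothetical names made up for illustration; they are not taken from the paper's code or from ShareJS.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Insert:
    pos: int   # document position to insert at
    text: str  # text to insert


def apply(doc: str, op: Insert) -> str:
    """Apply a single insert operation to a plain string document."""
    return doc[:op.pos] + op.text + doc[op.pos:]


def split_per_character(op: Insert) -> List[Insert]:
    """Turn one insert of N characters into N single-character inserts."""
    return [Insert(op.pos + i, ch) for i, ch in enumerate(op.text)]


doc = "hello world"
paste = Insert(pos=5, text="x" * 1000)

# One operation to process.
one_shot = apply(doc, paste)

# The same edit, but now there are 1000 operations to process.
split_ops = split_per_character(paste)
stepwise = doc
for op in split_ops:
    stepwise = apply(stepwise, op)

print(len(split_ops), one_shot == stepwise)  # prints "1000 True"
```

Both routes produce the same document. The split version just has to pay the per-operation overhead (and, in a real OT system, the per-operation transform cost) 1000 times instead of once.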

Even talking about this stuff we have a language problem. We describe each system as an "algorithm". Jupiter is an Algorithm. RGA is an Algorithm. But really there are two very separate aspects: the behaviour a system specifies, and the code somebody wrote to implement it.

If some academic's code runs slowly, what does that actually teach us? Maybe it's like tests. A passing test suite suggests, but can never prove, that there are no bugs. Likewise, a slow implementation suggests, but can never prove, that every implementation of the system will be slow. If you wait long enough, somebody will find more bugs. And, maybe, someone out there can design a faster implementation.

Years ago I translated my old text OT code into C, Javascript, Go, Rust, and Swift. Each implementation has the same behaviour, and the same algorithm. But the performance is not even close. In Javascript, my transform function ran about 100,000 times per second. Not bad! But the same function in C does 20M iterations per second. That's 200x faster. Wow! Were the academics testing a slow version or the fast version of this code? Maybe, without noticing, they had fast versions of some algorithms and slow versions of others. It's impossible to tell from the paper!

Making CRDTs Fast


So as you may know, I've been getting interested in CRDTs lately. For the uninitiated, CRDTs (Conflict-Free Replicated Data Types) are fancy programming tools which let multiple users edit the same data at the same time. They let you work locally with no lag. (You don't even have to be online). And when you do sync up with other users & devices, everything just magically syncs up and becomes eventually consistent. The best part of CRDTs is that they can do all that without even needing a centralized computer in the cloud to monitor and control everything.

I want Google Docs without Google. I want my apps to seamlessly share data between all my devices, without me needing to rely on some flakey startup's servers to still be around in another decade. I think they're the future of collaborative editing. And maybe the future of all software - but I'm not ready to talk about that yet.

But most CRDTs you read about in academic papers are crazy slow. A decade ago I decided to stop reading academic papers and dismissed them. I assumed CRDTs had some inherent problem. A GUID for every character? Nought but madness comes from those strange lands!

But - and this is awkward to admit - I think I've been making the same mistake as those researchers. I was reading papers which described the behaviour of different systems. And I assumed that meant we knew the best way to implement those systems. And wow, I was super wrong.

How wrong? Well. Running this editing trace, Automerge (a popular CRDT, written by a popular researcher) takes nearly 5 minutes to run. I have a new implementation that can process the same editing trace in 56 milliseconds. That's 0.056 seconds, which is over 5000x faster. It's the largest speed up I've ever gotten from optimization work - and I'm utterly delighted by it.

What is Automerge?


Automerge is a library to help you do collaborative editing. It's written by Martin Kleppmann, who's a little bit famous from his book and excellent talks. Automerge is based on an algorithm called RGA, which you can read about in an academic paper if you're into that sort of thing. Martin explains automerge far better than I will in a talk from 2020.

Automerge (and Yjs and other CRDTs) think of a shared document as a list of characters. Each character in the document gets a unique ID, and whenever you insert into the document, you name what you're inserting after. Imagine I type "abc" into an empty document. Automerge creates 3 items:

Automerge creates 3 items when typing "abc" into an empty document.

Let's say Mike inserts an 'X' between a and b, so we get "aXbc". Then we have:

Automerge tree structure after inserting 'X' between 'a' and 'b'.

Note the 'X' and 'b' both share the same parent. This will happen when users type concurrently in the same location in the document. But how do we figure out which character goes first? We could just sort using their agent IDs or something. But argh, if we do that the document could end up as abcX, even though Mike inserted X before the b. That would be really confusing.

Automerge (RGA) solves this with a neat hack. It adds an extra integer to each item called a sequence number. Whenever you insert something, you set the new item's sequence number to be 1 bigger than the biggest sequence number you've ever seen.

This is the algorithmic version of "Wow I saw a sequence number, and it was this big!" "Yeah? Mine is even bigger!" The rule is that children are sorted first based on their sequence numbers (bigger sequence number first). If the sequence numbers match, the changes must be concurrent. In that case, we can sort them arbitrarily based on their agent IDs. (We do it this way so all peers end up with the same resulting document.)
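Here is a minimal sketch of that tree and its ordering rule, assuming each item records the agent that created it and its sequence number. The `Item` and `TreeDoc` names, the agent names, and the choice to break ties by putting the smaller agent ID first are all assumptions for illustration; this is not automerge's actual code.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Item:
    content: str
    agent: str
    seq: int
    children: List["Item"] = field(default_factory=list)


class TreeDoc:
    def __init__(self) -> None:
        self.roots: List[Item] = []  # items inserted at the very start
        self.max_seq = 0             # biggest sequence number seen so far

    def insert(self, agent: str, content: str,
               parent: Optional[Item] = None,
               seq: Optional[int] = None) -> Item:
        # A local insert picks 1 + the biggest seq we've seen.
        # A remote insert arrives with its own seq already chosen.
        if seq is None:
            seq = self.max_seq + 1
        self.max_seq = max(self.max_seq, seq)
        item = Item(content, agent, seq)
        siblings = self.roots if parent is None else parent.children
        siblings.append(item)
        # Bigger sequence number first; ties are broken by agent ID.
        siblings.sort(key=lambda it: (-it.seq, it.agent))
        return item

    def text(self) -> str:
        # Depth-first walk: each item, then its children in sorted order.
        out: List[str] = []
        stack = list(reversed(self.roots))
        while stack:
            item = stack.pop()
            out.append(item.content)
            stack.extend(reversed(item.children))
        return "".join(out)


doc = TreeDoc()
a = doc.insert("seph", "a")            # seq 1
b = doc.insert("seph", "b", parent=a)  # seq 2
c = doc.insert("seph", "c", parent=b)  # seq 3
doc.insert("mike", "X", parent=a)      # seq 4, so X sorts before b
print(doc.text())  # prints "aXbc"
```

Because Mike had already seen 'b' and 'c', his 'X' gets a bigger sequence number than 'b' and lands in front of it, which is what he intended.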

Improving the Data Structure


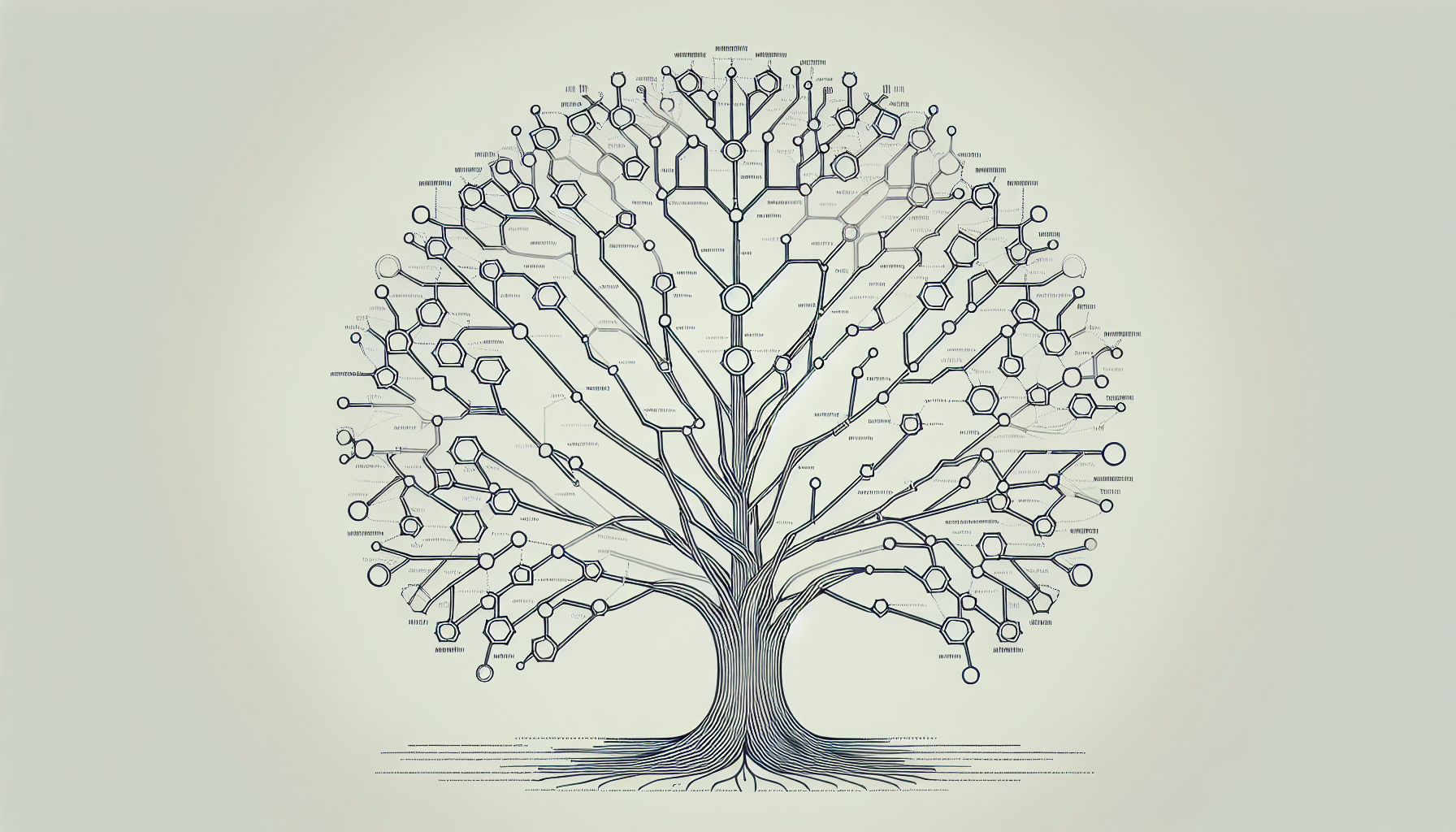

Luckily, there's a better way to implement CRDTs, pioneered in Yjs. Yjs is another (competing) open-source CRDT implementation made by Kevin Jahns. It's fast, well documented, and well made. If I were going to build software which supports collaborative editing today, I'd use Yjs.

Yjs doesn't need a whole blog post talking about how to make it fast because it's already pretty fast, as we'll see soon. It got there by using a clever, obvious data structure "trick" that I don't think anyone else in the field has noticed. Instead of implementing the CRDT as a tree like automerge does, Yjs just puts all the items in a single flat list:

Yjs uses a flat list instead of a tree structure.

That looks simple, but how do you insert a new item into a list? With automerge it's easy:

Inserting a new item in Automerge.

But with this list approach it's more complicated:

Inserting a new item in Yjs.

Essentially, this approach is just a fancy insertion sort. We're implementing a list CRDT with a list. Genius! This sounds complicated - how do you figure out where the new item should go? But it's complicated in the same way math is complicated. It's hard to understand, but once you understand it, you can implement the whole insert function in about 20 lines of code:

Insertion code for the list CRDT.
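As a rough illustration of what such an insert function can look like, here is a minimal sketch of the RGA ordering rule applied to a flat list. It assumes each item's ID is an `(agent, seq)` pair and that ties on `seq` put the smaller agent ID first; `Item`, `find_item` and `integrate` are hypothetical names, and this is not the actual Yjs or automerge code. The `(1)` and `(2)` comments mark the two expensive steps in this sketch, a lookup and an array insert; whether they match the markers in the original listing is a guess.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Id = Tuple[str, int]  # (agent, seq): an assumed ID scheme for this sketch


@dataclass
class Item:
    content: str
    id: Id
    parent: Optional[Id]  # ID of the item we were inserted after (None = start)


def find_item(doc: List[Item], item_id: Optional[Id]) -> int:
    """Linear search for an item's array index. Returns -1 for the start."""
    if item_id is None:
        return -1
    for i, item in enumerate(doc):
        if item.id == item_id:
            return i
    raise KeyError(item_id)


def integrate(doc: List[Item], new: Item) -> None:
    agent, seq = new.id
    i = find_item(doc, new.parent) + 1  # (1) find where to start scanning
    # Skip everything that must stay ahead of us: items with a bigger seq
    # (higher-priority siblings and their descendants) and concurrent
    # siblings with the same seq but a smaller agent ID.
    while i < len(doc):
        o_agent, o_seq = doc[i].id
        if o_seq > seq or (o_seq == seq and o_agent < agent):
            i += 1
        else:
            break
    doc.insert(i, new)  # (2) array insert shifts every later item


def text(doc: List[Item]) -> str:
    return "".join(item.content for item in doc)


doc: List[Item] = []
integrate(doc, Item("a", ("seph", 1), None))
integrate(doc, Item("b", ("seph", 2), ("seph", 1)))
integrate(doc, Item("c", ("seph", 3), ("seph", 2)))
integrate(doc, Item("X", ("mike", 4), ("seph", 1)))
print(text(doc))  # prints "aXbc"
```

The list always holds the items in document order, so reading the document is just a walk over the array. The cost moved into the insert: a search to find the parent, a scan to find the right slot, and an array insert.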

Death by 1000 Scans


We're using a clean and fast core data abstraction now, but the implementation is still not fast. There are two big performance bottlenecks in this codebase we need to fix. (These lines are marked (1) and (2) in the code listing above.)

To understand why this code is necessary, let's say we have a document, which is a list of items. And some of those items might have been deleted. I've added an isDeleted flag to mark which ones. (Unfortunately, we can't just remove them from the array because other inserts might depend on them. Drat! But that's a problem for another day.)

Imagine the document has 150,000 array items in it, representing 100,000 characters which haven't been deleted. If the user types an 'a' in the middle of the document (at document position 50,000), what index does that correspond to in our array? To find out, we need to scan through the document (skipping deleted items) to figure out the right array location. So if the user inserts at position 50,000, we'll probably have to linearly scan past 75,000 items or something to find the insert position. Yikes!
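The scan described above can be sketched like this. It is a minimal illustration, assuming items are stored in a plain array with a deleted flag (spelled `is_deleted` here to follow Python naming); `position_to_index` is a hypothetical helper name, and the "every third item is deleted" layout is made up to match the 150,000 / 100,000 numbers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Item:
    content: str
    is_deleted: bool = False


def position_to_index(items: List[Item], pos: int) -> int:
    """Map a document position to an array index by scanning from the start.

    Deleted items take up an array slot but no document position.
    Returns len(items) when pos is just past the last visible character.
    """
    remaining = pos
    for i, item in enumerate(items):
        if item.is_deleted:
            continue
        if remaining == 0:
            return i
        remaining -= 1
    if remaining == 0:
        return len(items)
    raise IndexError(pos)


# 150,000 array items, every third one deleted: 100,000 visible characters.
items = [Item("x", is_deleted=(i % 3 == 2)) for i in range(150_000)]

print(position_to_index(items, 50_000))  # prints 75000
```

Every edit in the middle of the document pays for a walk over tens of thousands of items, and the cost grows with the document.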

Changing the Data Structure


Can we fix this? Yes, we can! And by "we", I mean Kevin fixed these problems in Yjs. How did he manage that?

So remember, there are two problems to fix. Kevin solved the first problem by thinking about how humans actually edit text documents. Usually, while we're typing, we don't actually bounce around a document very much. Rather than scanning the document each time an edit happens, Yjs caches the last (index, position) pair where the user made an edit. The next edit will probably be pretty close to the previous edit, so Kevin just scans forwards or backwards from the last editing position.

This sounds a little bit dodgy to me - I mean, that's a big assumption to make! What if edits happen randomly?! But people don't actually edit documents randomly, so it works great in practice.

(What if two users are editing different parts of a document at the same time? Yjs actually stores a whole set of cached locations, so there's almost always a cached cursor location near each user no matter where they're making changes in the document.)
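Here is a minimal sketch of the caching idea, assuming a single cached (position, index) pair and a document that doesn't change between lookups. `CachedLookup` and its methods are hypothetical names for illustration, not Yjs's API. A real implementation also has to adjust or drop the cache whenever items are inserted or deleted, and (as noted above) Yjs keeps several cached locations rather than one.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Item:
    content: str
    is_deleted: bool = False


class CachedLookup:
    def __init__(self, items: List[Item]) -> None:
        self.items = items
        # (document position, array index) of the last lookup, if any.
        self.cache: Optional[Tuple[int, int]] = None
        self.last_steps = 0  # array slots walked by the most recent lookup

    def index_of(self, pos: int) -> int:
        """Array index of the visible item at document position `pos`."""
        if pos < 0:
            raise IndexError(pos)
        if self.cache is None:
            start = 0
            p, i = 0, self._forward(0)  # no cache yet: start from the front
        else:
            p, i = self.cache
            start = i
        while p < pos:  # target is after the cached spot: walk forwards
            i = self._forward(i + 1)
            p += 1
        while p > pos:  # target is before the cached spot: walk backwards
            i = self._backward(i - 1)
            p -= 1
        self.cache = (pos, i)
        self.last_steps = abs(i - start)
        return i

    def _forward(self, i: int) -> int:
        while self.items[i].is_deleted:
            i += 1
        return i

    def _backward(self, i: int) -> int:
        while self.items[i].is_deleted:
            i -= 1
        return i


items = [Item("x", is_deleted=(i % 3 == 2)) for i in range(150_000)]
lookup = CachedLookup(items)

lookup.index_of(50_000)
first = lookup.last_steps   # cold lookup: walks from the start of the array
lookup.index_of(50_001)
second = lookup.last_steps  # next lookup: starts right next to the target
print(first, second)  # prints "75000 1"
```

The first lookup is as slow as before, but every nearby lookup after that only walks a handful of items.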

Faster than Javascript


When I told Kevin that I thought I could make a CRDT implementation that's way faster than Yjs, he didn't believe me. He said Yjs was already so well optimized, going a lot faster probably wasn't possible. "Maybe a little faster if you just port it to Rust. But not a lot faster! V8 is really fast these days!!" But I knew something Kevin didn't know: I knew about memory fragmentation and caches. Rust isn't just faster. It's also a lower-level language, and that gives us the tools we need to control allocations and memory layout. Kevin knows this now too, and he's working on Yrs to see if he can claim the performance crown back.

Conclusion


That silly academic paper I read all those years ago says some CRDTs and OT algorithms are slow. And everyone believed the paper, because it was Published Science. But the paper was wrong. As I've shown, we can make CRDTs fast. We can make them crazy fast if we get creative with our implementation strategies. With the right approach, we can make CRDTs so fast that we can compete with the performance of native strings. The performance numbers in that paper weren't just wrong. They were "a billionaire guessing a banana costs $1000" kind of wrong.

But you know what? I sort of appreciate that paper now. Their mistake is ok. It's human. I used to feel inadequate around academics - maybe I'll never be that smart! But this whole thing made me realize something obvious: Scientists aren't gods, sent from the heavens with the gift of Truth. No, they're beautiful, flawed people just like the rest of us mooks. Great at whatever we obsess over, but kind of middling everywhere else. I can optimize code pretty well, but I still get zucchini and cucumber mixed up. And, no matter the teasing I get from my friends, that's ok.

A decade ago Google Wave really needed a good quality list CRDT. I got super excited when the papers for CRDTs started to emerge. LOGOOT and WOOT seemed like a big deal! But that excitement died when I realized the algorithms were too slow and inefficient to be practically useful. And I made a big mistake - I assumed if the academics couldn't make them fast, nobody could.

But sometimes the best work comes out of a collaboration between people with different skills. I'm terrible at academic papers, I'm pretty good at making code run fast. And yet here, in my own field, I didn't even try to help. The researchers were doing their part to make P2P collaborative editing work. And I just thumbed my nose at them all and kept working on Operational Transform. If I helped out, maybe we would have had fast, workable CRDTs for text editing a decade ago. Oops! It turned out collaborative editing needed a collaboration between all of us. How ironic! Who could have guessed?!

Remember these 3 key ideas for your startup:


- Leverage CRDTs for Seamless Collaboration: CRDTs allow multiple users to edit the same data simultaneously without requiring a centralized server. This can significantly enhance the collaborative capabilities of your applications, making them more resilient and efficient.

- Optimize Performance with the Right Data Structures: As demonstrated by the Yjs implementation, using a flat list instead of a tree structure can drastically improve performance. This approach can make your applications 30x faster and more memory-efficient, ensuring a smoother user experience.

- Invest in Modern Technologies like Rust and WebAssembly: By using languages like Rust, you can gain finer control over memory allocation and layout. Combined with the right data structures, this kind of work took one editing trace from nearly 5 minutes down to 56 milliseconds, a speedup of over 5000x.
