Today in Edworking News we want to talk about Apple’s On-Device and Server Foundation Models

At the 2024 Worldwide Developers Conference, we introduced Apple Intelligence, a personal intelligence system integrated deeply into iOS 18, iPadOS 18, and macOS Sequoia. Apple Intelligence comprises multiple highly capable generative models that are specialized for our users’ everyday tasks and can adapt on the fly to their current activity. The foundation models built into Apple Intelligence have been fine-tuned for user experiences such as writing and refining text, prioritizing and summarizing notifications, creating playful images for conversations with family and friends, and taking in-app actions to simplify interactions across apps.

Overview of Models

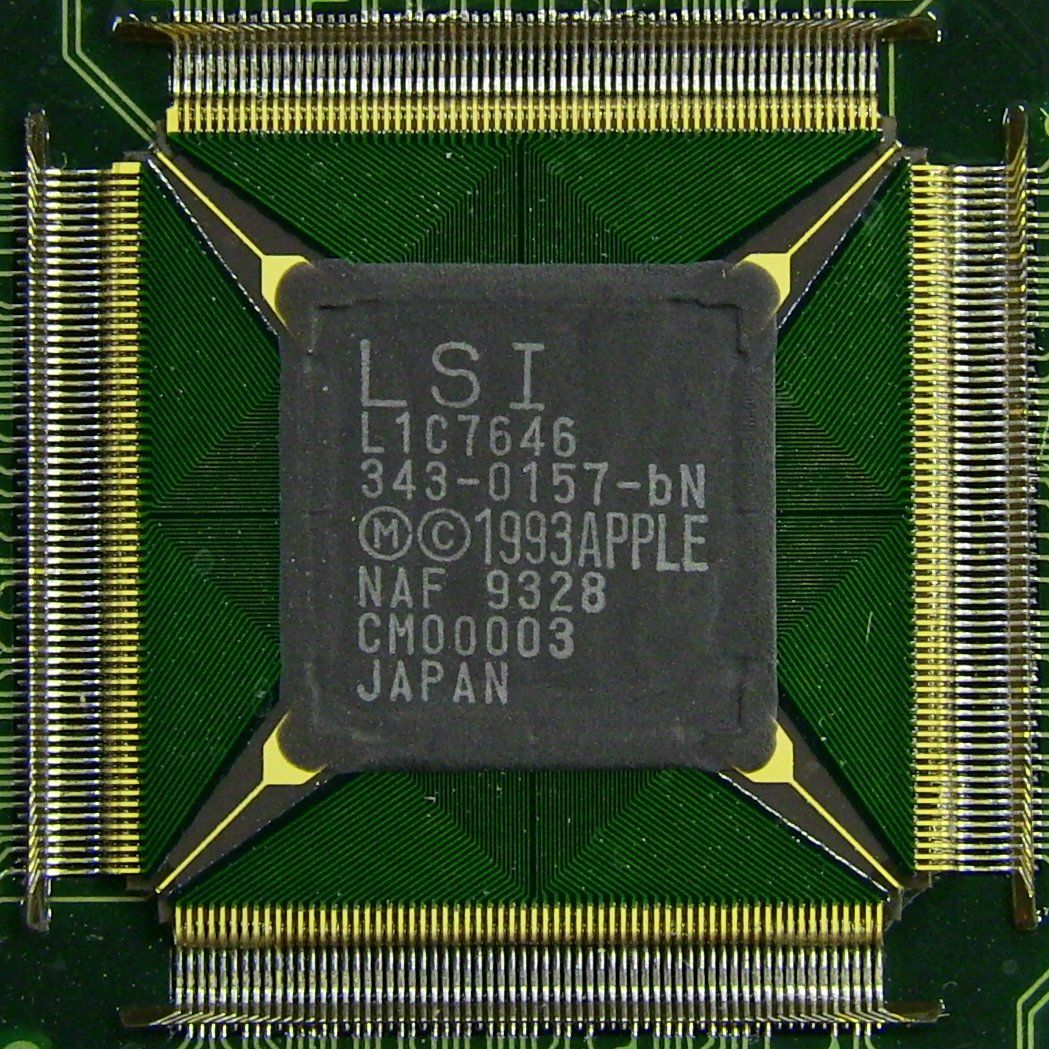

In the following overview, we will detail how two of these models—a ~3 billion parameter on-device language model, and a larger server-based language model available with Private Cloud Compute and running on Apple silicon servers—have been built and adapted to perform specialized tasks efficiently, accurately, and responsibly. These two foundation models are part of a larger family of generative models created by Apple to support users and developers; this includes a coding model to build intelligence into Xcode, as well as a diffusion model to help users express themselves visually, for example, in the Messages app.

Our Focus on Responsible AI Development

Apple Intelligence is designed with our core values at every step and built on a foundation of groundbreaking privacy innovations. Additionally, we have created a set of Responsible AI principles to guide how we develop AI tools, as well as the models that underpin them. These principles are reflected throughout the architecture that enables Apple Intelligence, connects features and tools with specialized models, and scans inputs and outputs to provide each feature with the information needed to function responsibly.

Pre-Training and Post-Training

Our foundation models are trained on Apple's AXLearn framework, an open-source project we released in 2023. It builds on top of JAX and XLA, and allows us to train the models with high efficiency and scalability on various training hardware and cloud platforms, including TPUs and both cloud and on-premise GPUs. We used a combination of data parallelism, tensor parallelism, sequence parallelism, and Fully Sharded Data Parallel (FSDP) to scale training along multiple dimensions such as data, model, and sequence length.
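To make the idea of data parallelism concrete, here is a minimal sketch in plain Python. It is not AXLearn or JAX code, and every name in it (`grad_mse`, `shard`, `data_parallel_grad`) is hypothetical. It only illustrates the principle: each worker computes a gradient on its own shard of the batch, and averaging those gradients (the job an all-reduce does on real hardware) gives the same result as computing the gradient on the full batch, provided the shards are equally sized.

```python
# Toy illustration of data parallelism with a 1-D linear model y = w * x.
# Hypothetical helper names; real frameworks do this across devices.

def grad_mse(w, xs, ys):
    """Gradient of the mean squared error with respect to w."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def shard(items, num_workers):
    """Split a list into equally sized contiguous shards.

    Assumes len(items) is divisible by num_workers.
    """
    size = len(items) // num_workers
    return [items[i * size:(i + 1) * size] for i in range(num_workers)]

def data_parallel_grad(w, xs, ys, num_workers):
    """Compute one gradient per shard, then average them (all-reduce mean)."""
    local_grads = [
        grad_mse(w, shard_x, shard_y)
        for shard_x, shard_y in zip(shard(xs, num_workers), shard(ys, num_workers))
    ]
    return sum(local_grads) / num_workers

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.5

full_batch = grad_mse(w, xs, ys)
two_workers = data_parallel_grad(w, xs, ys, num_workers=2)
print(full_batch, two_workers)
```

Tensor, sequence, and fully sharded parallelism split the model weights or the sequence dimension instead of the batch, which this toy example does not attempt to show.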

Post-training, we find that data quality is essential to model success, so we utilize a hybrid data strategy in our training pipeline, incorporating both human-annotated and synthetic data, and conduct thorough data curation and filtering procedures. We have developed two novel algorithms in post-training: (1) a rejection sampling fine-tuning algorithm with teacher committee, and (2) a reinforcement learning from human feedback (RLHF) algorithm with mirror descent policy optimization and a leave-one-out advantage estimator.
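The leave-one-out advantage estimator can be sketched in a few lines of Python. The article does not give a formula, so this assumes the commonly used form: for several responses sampled for the same prompt, each response's advantage is its reward minus the average reward of the other responses. The function name and the example rewards are hypothetical, and this is not presented as Apple's implementation.

```python
def leave_one_out_advantages(rewards):
    """Return one advantage per sampled response.

    Assumed form: A_i = r_i - mean(r_j for j != i), which uses the other
    samples for the same prompt as the baseline.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("need at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]

# Hypothetical reward-model scores for four responses to one prompt.
rewards = [1.0, 2.0, 3.0, 6.0]
advantages = leave_one_out_advantages(rewards)
print(advantages)
```

Responses that score above the average of their siblings get a positive advantage and are reinforced, while the rest are pushed down. Because the baseline for each response excludes that response's own reward, it does not depend on the sample it is judging.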

Optimization and Model Adaptation

In addition to ensuring our generative models are highly capable, we have used a range of innovative techniques to optimize them on-device and on our private cloud for speed and efficiency. We have applied an extensive set of optimizations for both first token and extended token inference performance.

Our foundation models are fine-tuned for users’ everyday activities, and can dynamically specialize themselves on the fly for the task at hand. We use adapters, small neural network modules that can be plugged into various layers of the pre-trained model, to fine-tune our models for specific tasks.
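As a rough illustration of how an adapter can be plugged into a layer, the sketch below wraps a frozen linear layer with a small low-rank module whose output is added to the base output. The low-rank form, the class name `AdaptedLinear`, the task names, and all the numbers are assumptions made for this example; the text above only says that adapters are small modules attached to layers of the pre-trained model.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

class AdaptedLinear:
    """A frozen linear layer with swappable low-rank adapters (hypothetical)."""

    def __init__(self, weight):
        self.weight = weight   # frozen base weights, shape (out, in)
        self.adapters = {}     # task name -> (down, up) matrices
        self.active = None     # name of the adapter currently in use

    def add_adapter(self, task, down, up):
        # down has shape (rank, in), up has shape (out, rank)
        self.adapters[task] = (down, up)

    def use(self, task):
        """Select an adapter by task name, or None for the base model."""
        self.active = task

    def __call__(self, x):
        base = matvec(self.weight, x)
        if self.active is None:
            return base
        down, up = self.adapters[self.active]
        delta = matvec(up, matvec(down, x))
        return [b + d for b, d in zip(base, delta)]

layer = AdaptedLinear(weight=[[1.0, 0.0], [0.0, 1.0]])
layer.add_adapter("summarize", down=[[1.0, 1.0]], up=[[0.5], [-0.5]])

x = [2.0, 3.0]
base_out = layer(x)            # base model only
layer.use("summarize")
adapted_out = layer(x)         # base output plus adapter correction
print(base_out, adapted_out)
```

Only the small `down` and `up` matrices differ between tasks, so switching tasks means switching a few parameters while the base weights stay untouched.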

Performance and Evaluation

Our focus is on delivering generative models that can enable users to communicate, work, express themselves, and get things done across their Apple products. When benchmarking our models, we focus on human evaluation as we find that these results are highly correlated to user experience in our products.

- Apple’s foundation models with adapters generate better summaries than comparable models.

- Both the on-device and server models are robust when faced with adversarial prompts, achieving violation rates lower than open-source and commercial models.

- Apple’s models follow detailed instructions and generate higher-quality writing than several competitor models.

The Apple foundation models and adapters introduced at WWDC24 underlie Apple Intelligence, the new personal intelligence system that is integrated deeply into iPhone, iPad, and Mac, and enables powerful capabilities across language, images, actions, and personal context. Our models have been created to help users do everyday activities across their Apple products, and have been developed responsibly at every stage, guided by Apple’s core values.

Edworking is the best and smartest decision for SMEs and startups to be more productive. Edworking is a FREE superapp of productivity that includes all you need for work powered by AI in the same superapp, connecting Task Management, Docs, Chat, Videocall, and File Management. Save money today by not paying for Slack, Trello, Dropbox, Zoom, and Notion.

Remember these 3 key ideas for your startup:

- User-Centric Design: Apple Intelligence models are fine-tuned for everyday tasks and can adapt on the fly. Startups can use similarly adaptive models to improve user engagement and satisfaction.

- Efficiency and Privacy: By focusing on speed, efficiency, and privacy, Apple shows that it’s possible to create highly capable AI models without sacrificing user data privacy, a principle that should be crucial for your startup as well.

- Responsible AI Development: The responsible AI principles guiding Apple’s development process ensure models are developed ethically and tested rigorously. Adopting a similar approach can help your startup build trust and credibility with users.

For more details, see the original source.
