There are significant changes happening in distributed systems. Object storage is becoming the database, tools for transactional processing and analytical processing are becoming one and the same, and there are new programming models that promise some combination of superior security, portability, management of application state, or simplification. These changes will influence how systems are operated in addition to how they are programmed.

While I want to embrace many of these innovations, it can be hard to pick a path forward. If a new technology only provides incremental value, most people will question the investment. Even when a technology promises a step change in value, it can be difficult to adopt if there is no migration path, and risky if it would be hard to reverse should it turn out to be the wrong investment. Many transactional and analytical systems are starting to use object storage because there is a clear step change in value, and optionality is mitigating many of the risks. However, while I appreciate the promise of new programming models, the path forward is a lot harder to grasp. If people can’t rationalize the investment, most will keep doing what they already know. As the saying goes, nobody gets fired for buying IBM.

I have been anticipating changes in transactional and analytical systems for a few years, especially around object storage and programming models, not because I’m smarter or better at predicting the future, but because I have been lucky enough to be exposed to some of them. While I cannot predict the future, I will share how I’m thinking about it.

One-Way-Door and Two-Way-Door Decisions

On Lex Fridman’s podcast, Jeff Bezos described how he manages risk from the perspective of one-way-door decisions and two-way-door decisions. A one-way-door decision is final or takes significant time and effort to change. For these decisions, it is important to slow down, involve the right people, and gather as much information as possible. These decisions should be guided by executive leadership.

> "Some decisions are so consequential and so important and so hard to reverse that they really are one-way-door decisions. You go in that door, you’re not coming back. And those decisions have to be made very deliberately, very carefully. If you can think of yet another way to analyze the decision, you should slow down and do that." — Jeff Bezos

A two-way-door decision is less consequential. If you make the wrong decision, you can always come back and choose another door. These decisions should be made quickly, often by individuals or small teams. Executives should not waste time on two-way-door decisions. In fact, doing so destroys agency.

> "What can happen is that you have a one-size-fits-all decision-making process where you end up using the heavyweight process on all decisions, including the lightweight ones—the two-way-door decisions." — Jeff Bezos

It is critical that organizations correctly identify one-way-door and two-way-door decisions. It is costly to apply a heavyweight decision-making process to two-way-door decisions, but even more costly is a person or a small team making what they think is a two-way-door decision when it is really a one-way-door decision now imposed on the whole organization for years to come. Many technology choices are one-way-door decisions because they require significant investments and are costly and time-consuming to change.

Object Storage

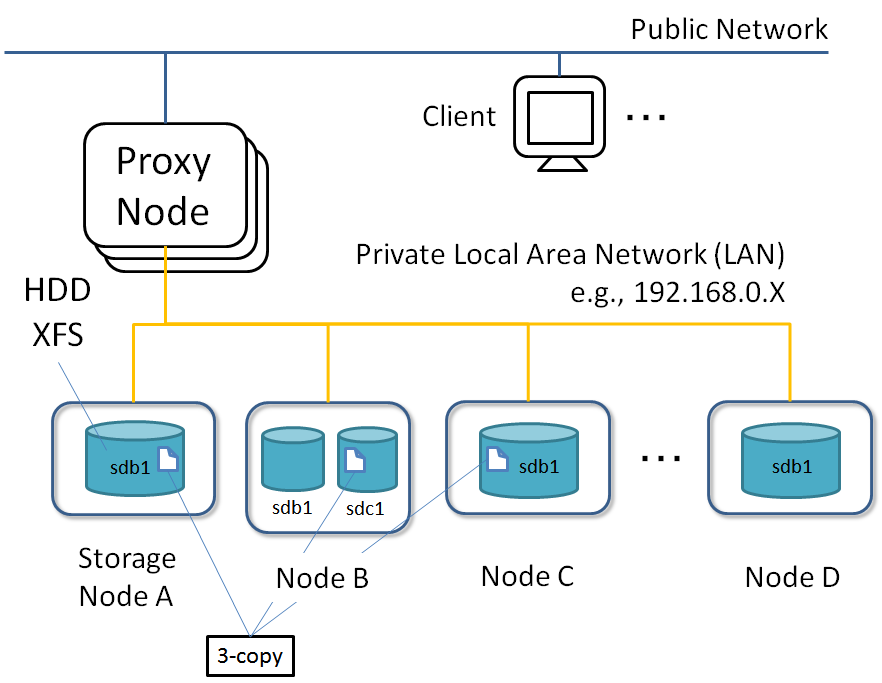

Cloud object storage is almost two decades old. While it is very mature and incredibly reliable and durable, it continues to see a lot of innovation. With the amount of attention to backwards compatibility, systems integration, and interoperability, almost every investment in object storage feels like a two-way-door decision, and this will continue to accelerate adoption, investment, and innovation.

Object storage is becoming a core part of many systems' architecture.

Over a decade ago, I built a durable message queue for sharing industrial data between enterprises using Azure Blob Storage page blobs. Because the object storage provided object leases, atomic writes, durability, and replication, it allowed us to build a simple and reliable system without having to worry about broker leadership election, broker quorums, data replication, data synchronization, or other challenges. The read side was independent of the write side, stateless, and could be scaled completely independently. While the company I was working for couldn’t figure out how to leverage this infrastructure to its fullest extent, I knew that relying on object storage was an architecture that had many advantages and I expected to encounter it again.
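To make the idea concrete, here is a minimal sketch of how an atomic primitive in the store can stand in for broker coordination. It is not the system described above, which used page blobs and leases. It substitutes a version-checked conditional write, and `InMemoryObjectStore`, `put_if_version`, `append_message`, and `read_messages` are hypothetical names for an in-memory stand-in, not any vendor's API.

```python
import threading


class PreconditionFailed(Exception):
    """Raised when a conditional write loses a race with another writer."""


class InMemoryObjectStore:
    """Hypothetical stand-in for an object store with conditional writes."""

    def __init__(self):
        self._lock = threading.Lock()  # models the store's own atomicity
        self._objects = {}             # key -> (version, bytes)

    def get(self, key):
        with self._lock:
            return self._objects.get(key, (0, b""))

    def put_if_version(self, key, data, expected_version):
        # The write succeeds only if nobody else has written since we read.
        with self._lock:
            current_version, _ = self._objects.get(key, (0, b""))
            if current_version != expected_version:
                raise PreconditionFailed(key)
            self._objects[key] = (current_version + 1, data)
            return current_version + 1


def append_message(store, key, message):
    """Append one line to a log object, retrying when another writer wins."""
    while True:
        version, data = store.get(key)
        try:
            return store.put_if_version(key, data + message + b"\n", version)
        except PreconditionFailed:
            continue  # re-read the latest version and try again


def read_messages(store, key):
    """Readers are stateless: all they need is the key."""
    _, data = store.get(key)
    return data.splitlines()


store = InMemoryObjectStore()
writers = [
    threading.Thread(target=append_message, args=(store, "queue/0", f"m{i}".encode()))
    for i in range(8)
]
for w in writers:
    w.start()
for w in writers:
    w.join()
messages = read_messages(store, "queue/0")
```

The writers never talk to each other and there is no leader. Ordering and safety come entirely from the store rejecting stale writes, which is why the read side can scale independently of the write side.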

Fast forward to today and there are many systems—everything from relational databases, time-series databases, message queues, data warehouses, and services for application metrics—using object storage as a core part of their architecture, including transactional workloads and not just analytical workloads, archival storage, or batch processing.

In addition, object storage features have expanded to include cross-region replication, immutability, object versioning, tiered storage, backup, read-after-write consistency, conditional writes, encryption, metadata, authorization, and more. These features can be used to address industry regulation, compliance, cost optimization, data lifecycle management, disaster recovery, and much more, and in a standard way across services, without having to build it directly into each application. So not only is object storage attractive from an architectural perspective, it is a win for simplicity and consistency.

While I can’t predict exactly how object storage will evolve, I expect the popularity of object storage to increase, especially for transactional and analytical systems. For example, storing data in Parquet files in Amazon S3 feels like a pretty safe bet. I expect read performance will continue to improve through reduced latency, increased bandwidth, improved caching, or better indexing, because it is something that will benefit the huge numbers of applications using S3.

If another storage format becomes more attractive than Parquet, I trust I can use an open table format, like Apache Iceberg or Delta Lake, to manage this evolution if I don’t want to reprocess the historical data. If I do want to reprocess the data, I can rely on the elasticity of cloud infrastructure to reprocess files when they are accessed, or as a one-time batch job. I’m not worried about choosing an open table format, because they all seem excellent, they are converging on a similar set of features, and they will undoubtedly support interoperability and migration. Similarly, if I rely on an embedded library for query optimization and processing, like DuckDB or Apache DataFusion, I expect them to continue to improve and share similar features.

In other situations, I might rely on Amazon Athena, Trino, Apache Spark, Pandas, or Polars for data processing. Tools will continue to improve for importing data from, or exporting data to, relational databases, data warehouses, and time-series databases. If I want to run the same services using another cloud provider, or in my own datacenter, there are other object storage services that have S3-compatible APIs. In other words, lots and lots of two-way doors. Actually, it is an embarrassment of riches.

Object storage is also a very simple storage abstraction. Embedded data processing libraries, like DuckDB and Apache DataFusion, can use the local file system interchangeably with object storage. This opens up the opportunity to move workloads from distributed cloud computing infrastructure and embed them directly in a single server, or move them client-side, embedded in a web browser, or even embedded into IoT devices or industrial equipment controlling critical infrastructure. The ability to move workloads around to meet changing requirements for availability, scalability, cost, locality, durability, latency, privacy, and security opens up even more two-way doors. With object storage, it’s two-way doors all the way down.
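A small sketch can show why this interchangeability makes workloads easy to move. It assumes a hypothetical `BlobSource` interface with a single `read` method, and it uses CSV rather than Parquet so that it runs with the standard library alone. It does not show the DuckDB or DataFusion APIs. `LocalFiles`, `FakeObjectStore`, and `total_energy` are illustrative names.

```python
import csv
import io
import tempfile
from pathlib import Path
from typing import Protocol


class BlobSource(Protocol):
    """Hypothetical minimal storage interface: fetch bytes by key."""

    def read(self, key: str) -> bytes: ...


class LocalFiles:
    """Serves keys from a directory on the local file system."""

    def __init__(self, root: Path):
        self.root = root

    def read(self, key: str) -> bytes:
        return (self.root / key).read_bytes()


class FakeObjectStore:
    """In-memory stand-in for a remote object store."""

    def __init__(self, objects: dict):
        self.objects = objects

    def read(self, key: str) -> bytes:
        return self.objects[key]


def total_energy(source: BlobSource, key: str) -> float:
    """The processing logic does not know where the bytes came from."""
    text = source.read(key).decode("utf-8")
    rows = csv.DictReader(io.StringIO(text))
    return sum(float(row["kwh"]) for row in rows)


DATA = b"device,kwh\npump-1,1.5\npump-2,2.5\n"

with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "readings.csv").write_bytes(DATA)
    local_total = total_energy(LocalFiles(Path(tmp)), "readings.csv")

remote_total = total_energy(FakeObjectStore({"readings.csv": DATA}), "readings.csv")
```

Because `total_energy` depends only on the narrow interface, the same function could run in a cloud service, on a single server, or on an edge device by swapping the source it is handed.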

Programming Models

The most disruptive change in the next decade may be how we program systems. It is a fundamental change in how software is developed and operated, and even in what we view as software and what we view as infrastructure, and most people have yet to grasp it. Many fail to see the value, and almost everyone is skeptical of how we get from here to there. While I believe the eventual outcomes are clear, the path forward is anything but. The fact that everything seems like a one-way door is hindering adoption.

I have been anticipating a shift in programming models for many years, not through any great insight of my own, but through my experiences building systems with Akka, a toolkit for distributed computing, including actor-model programming and stream processing. I saw how these primitives solved the challenges I had been working on for fifteen years in industrial computing—flow control, bounded resource constraints, state management, concurrency, distribution, scaling, and resiliency—and not just in logical ways, but from first principles. For example, actors can provide a means of modelling entities, like IoT devices, and managing state, but leave the execution and distribution of those entities up to the run-time, and in a thread-safe way. Reactive Streams provides a way to interface and interoperate systems, expressing the logic of the program, while letting the run-time handle the system dynamics in a reliable way. I could see how these models would logically extend to stateful functions and beyond, as I described in my keynote talk From Fast-Data to a Key Operational Technology for the Enterprise in 2018.
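As a rough illustration of the actor idea, the sketch below models each device as an object whose state is changed only by messages taken from its own mailbox, while scheduling is left to a run-time. This is not Akka's API. It uses Python's `asyncio` as the stand-in run-time, and `DeviceActor`, `tell`, and the `None` stop message are hypothetical conventions chosen for the example.

```python
import asyncio


class DeviceActor:
    """One actor per device; only the actor's own task touches its state."""

    def __init__(self, device_id):
        self.device_id = device_id
        self._mailbox = asyncio.Queue()
        self._readings = 0
        self._max_temp = None

    def tell(self, message):
        # Senders never modify state directly; they only enqueue messages.
        self._mailbox.put_nowait(message)

    async def run(self):
        while True:
            message = await self._mailbox.get()
            if message is None:  # hypothetical stop signal
                return self._readings, self._max_temp
            self._readings += 1
            if self._max_temp is None or message > self._max_temp:
                self._max_temp = message


async def main():
    actors = {name: DeviceActor(name) for name in ("pump-1", "pump-2")}
    # The run-time, not the caller, decides when each actor executes.
    tasks = {name: asyncio.create_task(actor.run()) for name, actor in actors.items()}
    for name, temp in [("pump-1", 20.0), ("pump-2", 31.5), ("pump-1", 22.5)]:
        actors[name].tell(temp)
    for actor in actors.values():
        actor.tell(None)
    return {name: await task for name, task in tasks.items()}


summary = asyncio.run(main())
```

A production toolkit adds what this sketch leaves out, such as supervision, distribution across machines, and persistence, but the shape of the programming model is the same: describe the entity and its messages, and let the run-time handle execution.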

Today, there are many systems trying to solve these challenges from one perspective or another. If you squint, they break down into roughly three categories. The first category consists of systems that abstract the most difficult parts of distributed systems, like managing state, workflows, and partial failures. These are systems like Kalix, Dapr, Temporal, Restate, and a few others. These systems generally involve adopting the platform APIs in your programming language of choice.

In the second category, in addition to abstracting some of the difficult parts of distributed systems, the platform will execute arbitrary code in the form of a binary, a container, or WebAssembly. Included in this category are wasmCloud, NATS Execution Engine, Spin, AWS Fargate, and others.

The final category holds the systems that are hard to categorize because they are so unique, like Golem, which, if I understand correctly, uses the stack-based WebAssembly virtual machine to execute programs durably, and Unison, which is an entirely new programming language and run-time environment.

However attractive or well engineered these solutions are, ten years from now, not all of these technologies, or the companies developing them, will exist. Even with the promise of solving important problems and accelerating organizations, it is nearly impossible to pick a technology because of this huge investment risk. Furthermore, so much of what matters is the quality and maturity of the tools for building, deploying, static analysis, debugging, performance analysis and all the rest, and most engineers are uncomfortable giving up control over the whole stack. Adding to the skepticism are questions about how AWS, Azure, Cloudflare, and the other cloud service providers will enter this market with their own integrated and potentially ubiquitous solutions. At the moment, it seems like one-way door after one-way door.

As I see it, the biggest opportunity for a new programming model is extracting the majority of the code from an application and moving it into the infrastructure instead. The second biggest opportunity is for the remaining code—what people refer to as the business logic, the essence of the program—to be portable and secure.

A concrete example will help demonstrate how I’m thinking about the future. In addition to the business logic, embedded in almost all modern programs are HTTP or gRPC servers for client requests, libraries for logging and metrics, clients for interfacing with databases, object storage, message queues, and lots more. Depending on when each application was last updated, built, and deployed, there will be many versions of this auxiliary code running in production. To patch a critical security vulnerability, just finding the affected services can be an enormous undertaking. Most organizations do not have mature software inventories, but even if they do, the inventory only helps with identifying the services; they still need to be updated, built, tested, and redeployed.

Instead of embedding HTTP servers and logging libraries and database clients and all the rest into an application binary, if this code can move down into the infrastructure, then these resources can be isolated, secured, monitored, scaled, inventoried, and patched independently from application code, very similar to how monitoring, upgrading, securing, and patching servers underneath a Kubernetes cluster is transparent to the application developer today. If the business logic can be described and executed like this, then it also becomes possible to move code between environments, like between the cloud and the IoT edge, or between service providers.
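One way to picture this split is business logic written only against narrow interfaces that the platform supplies. The sketch below is an illustration under that assumption, not the API of any of the platforms named earlier. `KeyValue`, `Logger`, `handle_reading`, and `InMemoryHost` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Protocol


class KeyValue(Protocol):
    """Hypothetical state capability provided by the platform."""

    def get(self, key: str) -> int: ...
    def set(self, key: str, value: int) -> None: ...


class Logger(Protocol):
    """Hypothetical logging capability provided by the platform."""

    def info(self, message: str) -> None: ...


def handle_reading(device_id: str, kv: KeyValue, log: Logger) -> int:
    """Business logic only: count how many readings each device has sent."""
    count = kv.get(device_id) + 1
    kv.set(device_id, count)
    log.info(f"{device_id} has reported {count} time(s)")
    return count


@dataclass
class InMemoryHost:
    """Stand-in for the infrastructure; a real host could swap in a database
    client or a logging pipeline without touching handle_reading."""

    data: dict = field(default_factory=dict)
    lines: list = field(default_factory=list)

    def get(self, key):
        return self.data.get(key, 0)

    def set(self, key, value):
        self.data[key] = value

    def info(self, message):
        self.lines.append(message)


host = InMemoryHost()
handle_reading("pump-1", host, host)
second = handle_reading("pump-1", host, host)
```

Nothing in `handle_reading` imports a database client, an HTTP server, or a logging library, so patching or replacing those pieces becomes a change to the host rather than a rebuild of every application.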

To encourage adoption, new programming models must find ways to transform the one-way-door decisions into two-way-door decisions. WebAssembly may help with this. WebAssembly offers a secure way to run portable code, and the WebAssembly Component Model could be the basis of a standard set of interfaces that more than one platform can provide. There may be other ways these platforms can encourage adoption by lowering risk, but the two most important things to me are: 1) not having to rewrite every application—in other words, some kind of migration path, rather than only greenfield adoption and 2) not being locked into a single provider should I want to move to a different platform, or move workloads from the cloud to my own datacenter, or into embedded IoT.

What is the Future?

There are major shifts happening in the software industry. In the future, distributed systems will look different. The decomposition of databases, transactional systems, and operational technology to incorporate object storage is well underway thanks to many two-way doors. New programming models could be very disruptive, but with so many one-way doors, the challenge of picking the technology winners and losers has never been harder. It is easier to keep doing what we already know.

> "In a distributed system, there is no such thing as a perfect failure detector. We can’t hide the complexity...our abstractions are going to leak." — Peter Alvaro

Programming a distributed system is hard because of the challenge of partial failures. Arguably, the success of object storage is partly due to abstractions that don’t hide all of the complexity. It remains to be seen how well new programming models can deal with partial failures without contorting the programming model itself. But these new systems are promising because they are getting back to basics, just with the lines of abstraction drawn in different places. This should result in systems that are simpler, more modular, with better separation of concerns, that are much easier to build, operate, maintain, secure, and scale.

Perhaps the biggest question is, will the early adopters out-compete the others? Or will the rest of the industry catch up quickly once the new programming and operational models become clear? How safe is it to just keep doing what we already know?

> "Abstractions are going to leak, so make the abstractions fluid." — Peter Alvaro

It is impossible to predict the future and I’m not going to pretend I can foresee it better than anyone else. However, I am confident in the macro trends of continued investment in object storage and, some day, the widespread adoption of new programming models that move more code down into the infrastructure. It will be fun to look back in a few years.
